Train your model on PALMA II

In this chapter we will show you how to train your model on the High Performance Cluster of the University of Münster.

The login process for the HPC is presented in a shortened (but sufficient) form in this tutorial. For more information on how the HPC works, there is a detailed wiki at High Performance Computing.

0. Registration on PALMA

To get access to the HPC, you (or rather your university ID) must be a member of the user group u0clstr. You can register for this group yourself via the IT-Portal of the University of Münster.

To do this, go to “Uni-Kennung und Gruppenmitgliedschaften” in the IT portal on the left and then click on “… Apply for a new account”.

In the dropdown that now appears, search for the user group “u0clstr” and apply for membership.

You may have to wait up to 24 hours for all group memberships to be updated on the HPC systems. So please wait one day before continuing.

1. Login to PALMA

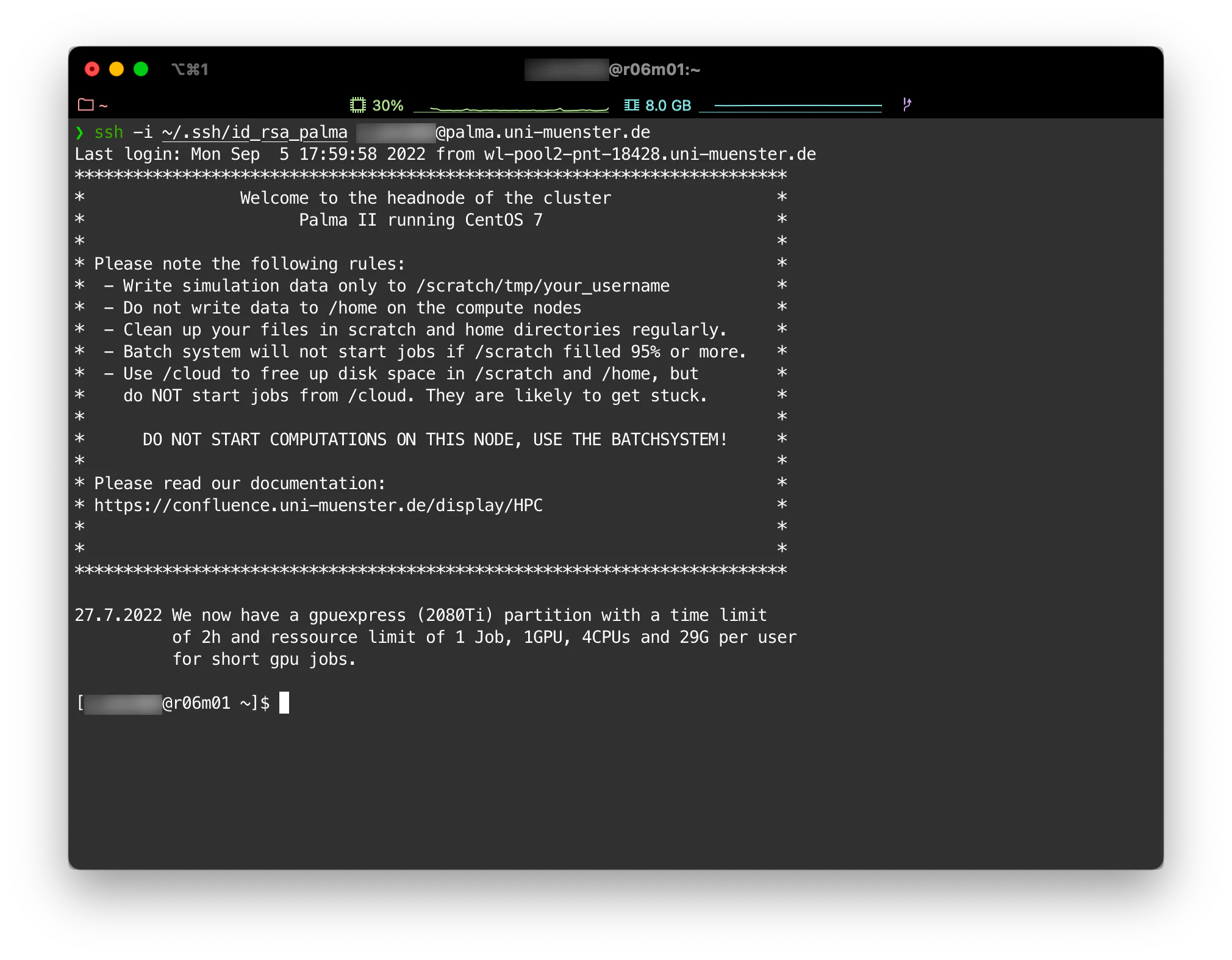

After registering, you can simply connect to PALMA via SSH. The command is as follows:

ssh <username>@palma.uni-muenster.de

In this case, your system's default SSH key is used to log in.

Further options for SSH access

If you want to use a specific private key, specify it explicitly in the command using the -i option:

ssh -i ~/.ssh/<private_key_file> <username>@palma.uni-muenster.de

Alternatively, you can configure SSH to always use a specific key for PALMA. To do this, add a host entry to the ~/.ssh/config file:

# Default

Host *

AddKeysToAgent yes

IdentityFile ~/.ssh/id_rsa

# For PALMA

Host palma.uni-muenster.de

AddKeysToAgent yes

IdentityFile ~/.ssh/<palma_key>

Now you should see that you are logged in to PALMA.

Important!

You are now on the head node (login node) of PALMA. Please do not start any jobs or calculations from here (e.g. never use the python command to execute a file directly; use the job system instead, see below). This node is only intended for logging in and scheduling work. Calculations on PALMA are submitted through the job scheduler Slurm, which ensures that the requested resources are actually free and then executes the task on a free compute node. Please read the following sections or the HPC instructions: Getting started
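One way to protect a script against being started on the login node by accident is to check for the SLURM_JOB_ID environment variable, which Slurm sets inside a running job. The following is only a minimal sketch: the function name in_slurm_job is hypothetical and not part of PALMA or Slurm.

```shell
#!/bin/sh
# Sketch: detect whether the current shell runs inside a Slurm job.
# in_slurm_job is a hypothetical helper name chosen for this example.

in_slurm_job() {
    # Slurm sets SLURM_JOB_ID inside a job; on the login node it is unset.
    [ -n "${SLURM_JOB_ID:-}" ]
}

if in_slurm_job; then
    echo "Running inside Slurm job ${SLURM_JOB_ID}"
else
    echo "Not inside a Slurm job: submit the work with sbatch instead."
fi
```

Such a check could be placed at the top of a start script so that heavy work only begins when the script was launched through the job system.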

2. My data on PALMA

To run calculations on PALMA, your software and any data required for execution (e.g. training data) must be available on the system. There are numerous ways to do this; we briefly present the most common ones here.

| Path | Purpose | Available on | Notes |
|---|---|---|---|
| /scratch/tmp/<username> | Temporary storage for input and output of your software | Login and compute nodes | Persistent storage: please download your results regularly and clean up the folder to avoid wasting disk space. No backups are made of this system! This directory is automatically available as the $WORK variable. |
| /mnt/beeond | Temporary local storage | Compute nodes | Suitable for temporary data that is generated during the calculation. |
| /home/[a-z]/<username>, example: /home/m/m_must42 | Storage for scripts, binaries, applications etc. | Login and compute nodes | Not a mass storage for data! Very limited capacity. You should store your software here. This directory is automatically set as $HOME. |
| /cloud/wwu1/<projectname>/<sharename> | Cloud storage/shares | Login nodes (r/w) and compute nodes (r) | Well suited e.g. for training data. To create a share, take a look at the Cloud Storage Solutions of the University of Münster and create it via OpenStack (see the chapter on OpenStack here in Incub.AI.tor). |
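The path patterns in the table can be derived from the username alone. The sketch below illustrates this, assuming the home directory always sits below a folder named after the first letter of the username, as in the example /home/m/m_must42. The helper names palma_home and palma_work are hypothetical. On PALMA itself, $HOME and $WORK already contain these values.

```shell
#!/bin/sh
# Sketch: derive the PALMA directory paths from a username.
# palma_home and palma_work are hypothetical helper names, not PALMA tools.

palma_home() {
    # The home directory lies below a folder named after the first letter.
    first_letter=$(printf '%.1s' "$1")
    printf '/home/%s/%s\n' "$first_letter" "$1"
}

palma_work() {
    # The scratch (working) directory is named after the full username.
    printf '/scratch/tmp/%s\n' "$1"
}

palma_home m_must42   # prints /home/m/m_must42
palma_work m_must42   # prints /scratch/tmp/m_must42
```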

2.1 Copy my code to PALMA

The easiest way to make your code available on PALMA is to clone your Git repository in the home folder. This also allows for easy updates should you need to further develop your scripts or fix bugs.

To clone our code, we simply run the clone command:

cd $HOME

git clone https://zivgitlab.uni-muenster.de/reach-euregio/incubaitor.git

Now that your code is available in the home folder as a Git repository, you can use

git pull

to apply new changes from the Git server. You can also commit changes that you make directly on PALMA.

Info: If you are working in a private repository, you may need to authenticate Git on PALMA, using either a token or an SSH key. We will add this part here soon.

IMPORTANT: Please do not yet execute your code as you are used to from your local computer. We will come to the execution of code on PALMA in section 3.

2.2 Making other data available on PALMA

To make other data available to your scripts (e.g. a training data set), you can use the scp command, which copies files like cp on a Linux system but also works with remote computers. For example, to copy our training CSV to PALMA, we can run the following command locally:

scp -r /path/to/local/files <username>@palma.uni-muenster.de:/scratch/tmp/<username>/

Here, replace /path/to/local/files with the path to your training CSV file and <username> with your username.
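Because the username appears twice in the scp target, it is easy to mistype one of the two. As a sketch, a small helper can assemble the command from a local path and a username and print it for review before you run it. The function name palma_upload_cmd is hypothetical, and the sketch assumes paths without spaces.

```shell
#!/bin/sh
# Sketch: print the scp command that uploads a local path to the PALMA
# scratch directory. palma_upload_cmd is a hypothetical helper name.

palma_upload_cmd() {
    local_path="$1"
    user="$2"
    printf 'scp -r %s %s@palma.uni-muenster.de:/scratch/tmp/%s/\n' \
        "$local_path" "$user" "$user"
}

# Only prints the command; copy it to your terminal to run it.
palma_upload_cmd ./data m_must42
# prints: scp -r ./data m_must42@palma.uni-muenster.de:/scratch/tmp/m_must42/
```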

Furthermore, we can use a user share, which we create in OpenStack (see chapter on OpenStack) and which can also be used elsewhere.

3. Job script and modules

Some software is already installed on PALMA and can be loaded and used via environment modules. Since many modules, libraries and other software depend on other libraries, PALMA organizes them in so-called toolchains. A toolchain is simply a combination of compilers and libraries; currently there are foss (free and open source software) and intel (proprietary).

An overview of the available toolchains can be viewed here.

A toolchain can be loaded using the commands

module load <palma software stack>

module load <toolchain>

# example:

module load palma/2020a

module load intel/2020a

Then use the command

module avail

to see a list of the available libraries.

As PALMA works with a batch system that allocates the computing capacity for calculations (jobs) centrally, we have to tell the system in a small script which resources and which software we need for our job. The name of the *.sh file is relatively unimportant; it can be called job.sh or, if there are several files, job_carprice.sh, for example.

In our use case, the job.sh file could look like this:

#!/bin/bash

## The #SBATCH lines look like comments, but they are not comments! They are directives read by Slurm.

#SBATCH --nodes=1 # the number of nodes you want to reserve

#SBATCH --ntasks-per-node=1 # the number of tasks/processes per node

#SBATCH --cpus-per-task=4 # the number of CPUs per task

#SBATCH --partition=normal # on which partition to submit the job

#SBATCH --time=1:00:00 # the max wallclock time (time limit for your job)

## This small example training can be done on CPUs.

## If you need GPUs, remove one # from each of the following two lines and remove the --partition=normal line above:

##SBATCH --gres=gpu:1

##SBATCH --partition=gpuv100

#SBATCH --job-name=IncubAItor_DEMO # the name of your job

#SBATCH --mail-type=ALL # receive an email when your job starts, finishes normally or is aborted

#SBATCH --mail-user=your_account@uni-muenster.de # your mail address

# LOAD MODULES HERE (software stack first, then the toolchain)

module load palma/2020a

module load foss/2020a

module load TensorFlow/2.3.1-Python-3.8.2

module load matplotlib

module load scikit-learn

# START THE APPLICATION

srun python3 /home/<first letter of username>/<username>/incubaitor/2_1_PALMA/app/train.py

IMPORTANT: Customize the file accordingly before executing it on PALMA!

First we tell the system how many nodes we want to reserve for our job, then we specify further details such as the number of tasks/processes per node, the number of CPUs per task and the maximum execution time. As we are only performing a small machine learning task, we do not need a GPU, but one can be reserved if required. Finally, we enter the job name and our e-mail address, to which the scheduling system sends notifications when the status of our job changes.

INFO: Sometimes it is difficult to estimate how many resources you need for a job. Don’t be afraid to try things out a little or to reserve too many resources. However, the more resources you reserve, the more likely it is that you will have to wait a little until your job starts, as the required resources may still be reserved elsewhere.
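When trying out different resource requests, it can help to generate the header of the job script instead of editing it by hand. The following sketch prints a header with the same directives as the job.sh example for a given job name, CPU count and time limit. The function name make_job_header is hypothetical, and the partition name normal is simply taken over from the example.

```shell
#!/bin/sh
# Sketch: print a Slurm job header for a job name, CPU count and time limit.
# make_job_header is a hypothetical helper; the directives mirror job.sh.

make_job_header() {
    job_name="$1"
    cpus="$2"
    time_limit="$3"
    cat <<EOF
#!/bin/bash
#SBATCH --nodes=1
#SBATCH --ntasks-per-node=1
#SBATCH --cpus-per-task=${cpus}
#SBATCH --partition=normal
#SBATCH --time=${time_limit}
#SBATCH --job-name=${job_name}
EOF
}

# Print a header that requests 4 CPUs for at most one hour.
make_job_header IncubAItor_DEMO 4 1:00:00
```

The module load lines and the srun call still have to be appended to the generated header before the script can be submitted.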

We now have the job script that describes our task in detail and are ready to run. You will need the following commands, among others:

| Command | Task |
|---|---|
| sbatch job.sh | Add your job to the waiting list. |
| squeue -u <user> | Show all jobs of a user. |
| squeue -u <user> -t RUNNING | Show all running jobs of a user. |
| squeue -u <user> -t PENDING | Show all waiting jobs of a user. |
| scancel <job-id> | Cancel a specific job. |
| scancel -u <user> | Cancel all jobs of a user. |
| scancel --name <jobname> | Cancel all jobs with this name. |
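Several of these commands need the job ID. On success, sbatch usually prints a line of the form "Submitted batch job 123456", so the ID can be read from the last word of that message. The sketch below assumes exactly this message format. The helper name parse_job_id is hypothetical.

```shell
#!/bin/sh
# Sketch: extract the job ID from the line sbatch prints on success,
# e.g. "Submitted batch job 123456". parse_job_id is a hypothetical helper.

parse_job_id() {
    # The job ID is the last whitespace-separated field of the message.
    printf '%s\n' "$1" | awk '{ print $NF }'
}

# On PALMA this could be used roughly as follows (not executed here):
#   job_id=$(parse_job_id "$(sbatch job.sh)")
#   squeue -u "$USER" -t RUNNING
#   scancel "$job_id"

parse_job_id "Submitted batch job 123456"   # prints 123456
```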

Once your job has run, you will find its log in a slurm-<jobid>.out file in the directory from which you submitted the job, so that you can track down any errors.

4. Incub.AI.tor example

You can now customize the example. If you have copied the example to your home directory as shown in section 2, you can edit the job.sh file directly on PALMA, for example with the editor vim (which is not very beginner-friendly!), or make the changes in an editor of your choice on your computer and upload the file using scp (see 2.2).

The example expects the data in your working directory ($WORK). The data used here comes from the Kaggle used car dataset. You can download it locally and then either add it to your local repository or copy it directly into your working directory using scp (here the archive has already been unpacked and the folder renamed to data).

Execute the following command locally on your computer:

scp -i $HOME/.ssh/id_rsa_palma -r data <username>@palma.uni-muenster.de:/scratch/tmp/<username>/

The folder is then in the working directory, and we can view it on PALMA:

cd $WORK/data

ls

All data files should now be listed. Now we can copy the job script and submit it:

cd $WORK

cp $HOME/incubaitor/2_PALMA/2_1_PALMA/job.sh $WORK

sbatch job.sh

The job is assigned a job ID, which is displayed to you. With the squeue command described above, we can check whether the job is being executed. We can also look at the output in the Slurm log file (vim slurm-<jobid>.out). When the job is finished, you should have a folder models in the working directory, which you can download to your computer as follows:

scp -i $HOME/.ssh/id_rsa_palma -r <username>@palma.uni-muenster.de:/scratch/tmp/<username>/models models

scp -i $HOME/.ssh/id_rsa_palma <username>@palma.uni-muenster.de:/scratch/tmp/<username>/slurm-<jobid>.out slurm.txt

In slurm.txt you can now see the entire Python output as it would have been normally under Python (including errors).
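For long logs, a quick check for Python errors can save some scrolling. The sketch below searches the downloaded log for the line with which Python begins the report of an uncaught exception. The helper name log_has_traceback is hypothetical, and the file name slurm.txt is the one used in the download command above.

```shell
#!/bin/sh
# Sketch: report whether a downloaded Slurm log contains a Python traceback.
# log_has_traceback is a hypothetical helper name.

log_has_traceback() {
    # Python starts the report of an uncaught exception with this line.
    grep -q 'Traceback (most recent call last)' "$1"
}

# Only inspect the log if it has been downloaded to the current directory.
if [ -f slurm.txt ]; then
    if log_has_traceback slurm.txt; then
        echo "The job log contains a Python error."
    else
        echo "No Python traceback found in the job log."
    fi
fi
```

A log without a traceback does not prove that the training succeeded, so it is still worth checking that the models folder was created.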

Last but not least: Log out of PALMA again by entering the command exit on PALMA!